Sensory neurons encode their inputs into sequences of neural spikes. For example, retinal ganglion cells in the human eye send information about our visual environment at a rate of ~1 Mb/s through a million or so axons in the optic nerve, on its way to the visual cortex.

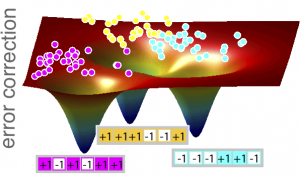

An illustration of an optimal coding strategy when single neurons are noisy. Multidimensional stimuli (schematically depicted as colored dots) are mapped into binary neural codewords representing the patterns of firing and silence of 6 neurons. This picture is conceptually analogous to a frustrated (spin-glass) Ising model whose energy landscape has the codewords as its local energy minima. From PNAS 107: 14419 (2010).

One set of questions about this process deals with how single neurons behave: for instance, how they adapt their coding strategy to changes in overall illumination and contrast. A second set of questions deals with the nature of the neural code. Today it is possible to record the activity of many neurons at once and thus ask whether each neuron provides an independent piece of information about the environment, or whether the code is collective, i.e., whether information is encoded in population patterns of activity. How could we learn this code? How does the brain learn it during development? Can we discover coding principles that extend from the retina to other parts of the nervous system? Are the codes used by the brain optimized for some function that can be mathematically quantified?
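One simple way to make the question of independent versus collective coding concrete is to compare the entropy of the recorded population patterns with the entropy the same neurons would have if they fired independently. The sketch below is only an illustration under stated assumptions, not the analysis used in the work described here: the function names, the synthetic data, and the naive plug-in entropy estimate are all hypothetical choices, and the plug-in estimate is biased for small samples and only practical for small populations.

```python
import numpy as np

def entropy_bits(p):
    """Shannon entropy (in bits) of a probability vector, ignoring zero entries."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))

def multi_information(spikes):
    """Sum of single-neuron entropies minus the joint pattern entropy, in bits.

    spikes: (T, N) array of 0/1 values, T time bins by N neurons.
    A value near zero is consistent with independent neurons; a large
    value indicates that the population patterns are strongly correlated.
    Assumption: naive plug-in estimates, so N must be small and T large.
    """
    spikes = np.asarray(spikes, dtype=int)
    T, N = spikes.shape
    # Entropy of each neuron treated on its own (firing vs. silence).
    rates = spikes.mean(axis=0)
    h_independent = sum(entropy_bits([r, 1.0 - r]) for r in rates)
    # Encode each N-neuron pattern as an integer and count occurrences.
    codes = spikes @ (1 << np.arange(N))
    counts = np.bincount(codes, minlength=2 ** N)
    h_joint = entropy_bits(counts / T)
    return h_independent - h_joint

# Synthetic illustration (not real recordings).
rng = np.random.default_rng(0)
independent = (rng.random((20000, 3)) < 0.5).astype(int)
driver = (rng.random((20000, 1)) < 0.5).astype(int)
redundant = np.repeat(driver, 3, axis=1)  # three neurons copying one signal

mi_independent = multi_information(independent)
mi_redundant = multi_information(redundant)
```

In this toy example the independent population should give a value close to zero, while three neurons that copy the same signal share roughly two bits; real data would typically fall in between and would need a bias-corrected entropy estimator.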

In collaboration with several experimental labs, we are exploring neural coding, adaptation, learning, and the building of neural representations. We try to find phenomenological yet tractable models that we can connect to data and for which we can develop physics intuition.